-

habitations

End products, habiting difference

Monday July 22, 2024It’s Wednesday evening. Incandescence lazes out from the rectangle in the southeast corner of my home work station. Zen Master Bon Seong, a fiery bushel on screen, a big circle face in the biggest square. He is using the figurative Zen Stick to short-circuit our cognition. He is fond of saying things like “You are stardust” or “What is I?”.

Jeff breathes hot air through the broom on his face. Those bits go through audio processing and seem to emanate from nowhere around the work station. “The infinite expansion of the same thing” is uttered. We’re discussing how people can interpret the same phenomena differently. As we say: “The whole world is made by mind alone.” And: “The whole world is a single flower.” As the Heart Sutra says: “form is emptiness, emptiness is form”. You cough. I’m startled. I freeze. I think you have COVID. I think I’m going to get it. Then get long COVID. Someone else sees you cough, they think it’s a sneeze and continue on. Perhaps it is.

The dharma talk continues. There’s nothing wrong with our kaleidoscope of perspectives. They are regular. This is the human mind being itself, resting in its station, but painting a tale on snake, coloring it’s ridiculous karma all over “the small box” that is our little piece of understanding of the universe.

Gosh, we’re talking about boxes from within boxes. Our thinking creates this separate, enclosed being. (Consciousness bound by a skin sack? Not today, the microbiome field transforms you. (Sigh. No help lugging our sacks and their biomic nerve fields closer together. We have decades of men making the Bay car-friendly, but I can’t get across conveniently. I can’t get to Berkeley from Potrero without spending as much time commuting as the duration sitting meditation with the other sacks on for Wednesday night dharma talks.)) The problem is getting attached to difference. Not understanding our “universal substance” – what we say as a convenience. That’s why Zen is about returning to before thinking, accessing the truth before difference. So maybe instead: you cough, then I hand you a tissue.

I don’t want this post to be about the negatives of the human mind’s proclivity to be a multiversal agent. It’s not really a negative, is it? It’s a matter of fact about our minds as atoms floating in space. Space dust. So, instead: let’s relish in the generative creativities that difference making unleashes when multiple human minds are applied to a problem of interpretation.

Zen students say: “All things return to the one.” Once again, I have returned to software. One of my favorite ones for sifting life’s exposures. Nonetheless, I’m thinking about difference. The way the one refracts and playfully escapes. Our turn here will be akin to Jewish monotheism – a personal return to spiritual foundations – a spiritual tradition where the only official doctrine claims one god but there’s no consensus about what that means.

An often-cited medieval midrash asserts that each of the Israelites at Mount Sinai heart God’s revelation differently according to their own capacity to understand it. They each had their own individual experience of the Divine. – Sarah Hurwitz, Here All Along

A long time passed but the Buddha did not open his mouth to say a single word. He then reached down, picked up a flower and, without a word, held the flower aloft. Nobody in the assembly understood what the Buddha meant by this action. Only Mahakasyapa, sitting at the far back, smiled.

But not everyone smiled!

This “problem” of software: the epistemological endeavor to solve challenges in the world through reasoning about what’s actually happening out there and interpreting it into binary logics, electrical pulses: of taking a shared thought among humans – sometimes only the wispy thrust of a thought – and converting it to instructions for the computer. Zen Master Seung Sahn used to say you have to “digest your mind meal”, your understanding. Making software is a kind of digestion of understanding. Pushing thought stuff through a cheese cloth named Divine.

The software process is messy, from beginning to middle to end. Which is why, as Zen student, I must utter: Where does the one return? Software’s interpretive act is a mix up, a Deleuzian differencing that flies a-face a unifying core concept. That’s why software is never completed, or doesn’t seem to exist anywhere in particular. Is it the text in your editor, the code running on the server, the git history, the button that is pressed in a browser, the heavy metals of the memory disk? The lines between human, software, software process, the hardware it runs on, the “world out there” the software performs for…not the cleanest of lines, but lines in flight.

I want to argue here that the distinction between the software system and its development–that is, between the process and product–is another “point of opacity” of software engineering since, albeit necessary, it cannot be kept up at all times. – Federica Frabetti, Software Theory

Software is subversive to hard-dick narratives. It’s feminist, it’s counter-cultural in its essence: which is why gate-keepers try to wrest it away from the hacker collective and make it property. The wisp of generative artificial intelligence hallucinatory resonance is hardly surprising, it’s a re-run run amok. Where does the one return inside your algorithmic nebula? Indeed.

Messy. Material. Just as digestion is wont to be, from mouth mashing through shitting. (Thankfully this physical body process is mostly obscured by our containment sacks.) The metaphor is apt. The world is messy as it is, and software is in it, made from it, made for it. This is why the end product of the software process inspires programmers of acrimonious humor, sometimes of low emotional intelligence, or of self-deprecatedness, or simply exasperated, exhausted… these programmers must declare that which comes from the butt. “This code is crap.” “Who wrote this shit code?”

It’s generally a pessimistic view to call code crap. I’d use “compost” if we needed a corresponding brownish, molten substance for a brighter horizon effluent cream - a regenerative solar punk version. Or something like “the best with what we had.” We need less snark in the industry, more heart.

Thankfully (we can breath a sigh) there are loving defenders of our end products out there. Rachel Kroll is one, synonymously online as rachelbythebay. She emancipates some lines (of code) and litigates her own “crap code” through a simulated trial judged by such an exasperated panel, and then performs my favorite type of “if I knew now what I knew then” refactor blogging with a procedural splunk. It’s a beautiful example of husbanding thought stuff a second time through.

Similar to verbalized language, programming languages provide multiplicities for getting thoughts out whether through your face broom or unadulterated cheeks and lips. Adult English speakers would not be surprised to hear the the idea of reversal expressed by the variations “they retraced their steps”, “they went back”, “they returned”. Back to computers: the “high level” Ruby programming language provides a relatively expressive syntax with multiple lexical pathways for computer instruction. While the variations in high level programming languages like Ruby may not be imbued with the rich cultural context of spoken English phrases, they demonstrate what I’ve always found to be a never gets old trait of programming since the recent invention of modern programming languages now used in heterogeneous postmodern programming environments: there is creative choice!

Similar to English speakers, Ruby programmers will follow contextual precedence, and are bounded by the limits of the syntax. And yet, when going about telling a computer to reverse something, they can still surprise us.

Let’s say a Rubyist wants to reverse a word, known in programming parlance as a “string”: any sequence of characters (letters, numbers, symbols). This rubyist might decide to key punch a common implementation using more “imperative” syntax constructs like a

while loop,indexed lookups, andstring concatenation. Synonymously referred to as language primitives, imperative expressions are more verbose, but yield more control and flexibility back to the programmer. To wit:counter_index = original_word.length # create empty string to contain new reversed word reversed_word = "" # start processing reversal while counter_index > 0 # decrement the counter counter_index = counter_index - 1 current_letter = original_word[counter_index] # add letter to the result reversed_word << current_letter endThat’s one option. But looping over a string is such a common operation that Ruby provides some convenience to do this. In place of a while loop, index references, esoteric symbols like

<<, Ruby let’s us slurp all that imperative code into higher order string methods likeeach_char– as in “loop over each character” – andprepend(self explanatory, I hope). Under the hood, Ruby implements these methods with it’s own primitives, likely similar to what we’ve written above. Let’s try it:reversed_word = "" original_word.each_char do |letter| # optional debuggers # puts letter # puts reversed reversed_word(letter) endThis code is considered easier to read by many programmers because the method names already describe the code’s intent. There’s also less code to mash in the mouth, therefore less information to pack into the brain’s working memory.

Ruby’s

do blocksyntax which yields control over the looped character logically bounds our new string construction procedure in a semantically associative workspace. Coding with the word. Somewhat different than the unhinged free jazz of a while loop block with throw-away variables.A Rubyist interested in a concise way to “one line” this operation might want to do all the work in place without variable initialization. There’s this:

original_word .chars .each_with_object("") { |index, reversed_word| reversed_word.prepend(index) } .joinTransforming the original word string back and forth from an array to feed the

each_with_objectpiles on the syntax, but this is useful for writing code in a more functional style.Of course, who even has all that time on their hands to play around. Let’s just make Ruby do all the work for us:

original_word.reverseIn fact, we often have more time, because software needs to last. Therefore it’s not uncommon to see computational code like this registered into a system of collaborators as a member of a reversing machine that can receive messages for requests like

reverse_my_word:class WordReverseMachine def initialize(word) @word = word end def reverse @word.reverse end end # Somewhere else in my system... WordReverseMachine.new(word).reverseAlthough, maybe my

WordReverserMachineshouldn’t be so opinionated by how to reverse things. Perhaps I want to borrow a reverse engine from other distant cyberscapes. Let’s allow our machine to swap engines as needed:class WordReverseMachine def initialize(word, reverse_engine) @word = word @reverse_engine = reverse_engine end def reverse if reverse_engine? @reverse_engine.new(@word) else word.reverse end end def reverse_engine? @reverser.nil? end end # Somewhere else in my sytem... WordReverseMachine.new(word).reverseReversing a word is a simplistic task, which is why languages like Ruby come with a

reversemethod on allStringobjects out of the box. And yet, programmers can dream wildly different expressions as demonstrated above.Let’s take a look at the diversity of expression that unfolds from interpreting a task in a more culturally rich and relevant problem space. This is one is from an actual mess in the wild – unruly realism – where the task at hand is to look at all the products currently on sale in a restaurant website product inventory database that are not assigned to any particular menu.

Ok: so imagine this shop management software lets managers add products, but wait till later to figure out what menus those products go in. After all, does a croissant go in Breakfast or Pastries? It may not be obvious at first. Splitting up the administration of products this way creates efficiency for product inventory management and menu design. Which allows for more general flexibility and adaptability of a restaurant’s ordering experience. It lets a shop not use menus at all, or have some products in menus and some just floating on their own as standalone products.

These requirements imply that software developers working with this database will need to care about querying for products in a few different ways. Let’s play with a use case where a restaurant manager wants to display a website menu with products that are both in menus as well as a section for all other products. For example, there might be menus for Breakfast, Lunch, Dinner, and then a general list of other products under an…“Other” heading.

The software that supports this shop management software implements this data model for relating products to menus:

Marketplace Productshave manyMarketplace Tags, and these two data types are associated with each other through a bridge table ofMarketplace Product Tags. This is a fairly typical way of modeling a tagging system in a Ruby on Rails project with a relational database. Here’s what the model definitions might look like in Rails code leveragingActiveRecordas an ORM:# Product Model class Marketplace::Product has_many :tags, through: :product_tags, inverse_of: :products end # Tag Model class Marketplace::Tag has_many :product_tags, inverse_of: :tag, dependent: :destroy has_many :products, through: :product_tags, inverse_of: :tags scope :menu_tag, -> { where(is_menu: true) } scope :not_menu, -> { where(is_menu: false) } endOk: so menus, or groups of products, are formed by products sharing a

Marketplace Tagwhere a special boolean field calledis_menuis set to true on that tag.For our case, where this restaurant’s ordering page lists both products in menus and the rest beneath an “Other” heading, querying the right content for our others is the tricky part. This system allows shop managers to tag products freely. There is no restricted set of tags, or other constraints for product and tag associations. Therefore we’ll need to join across three different tables,

marketplace_products,marketplace_products_tags, andmarketplace_tags, and then collect anyMarketplace Productswhere none of their associatedmarketplace_tagshave anis_menuflag set toTRUE.Lets start the beautiful differencing!

With the help of my fellow coders at Zinc Coop with whom I workshopped this problem, I’ve cooked three ways to query our postgres database for products without a menu tag with both postgres-flavored SQL and ActiveRecord ORM. (More ways left to be discovered.)

Even if you’ve got a mature ORM like ActiveRecord at hand, it can be pragmatic to express your query in SQL so the selection logic you enshrine in code is blatantly evident. What if, for our initial cook, we code with oneness. Get the data with a single select statement. Not necessarily bravado, or premature consideration for performance characteristics just yet. Let’s see what we can do with that generative single origin

selectstart point.Mario Kart starting line count down anticipation…

select mp.* from marketplace_products mp full join marketplace_product_tags mpt on mpt.product_id = mp.id full join marketplace_tags mt on mt.id = mpt.tag_id where mp.marketplace_id = '#{id}' group by mp.id having not 't' = any(array_agg(mt.is_menu));The cleverness on the last line creates a collection of boolean markers for each

Marketplace Product–torf– representing whether the correspondingMarketplace Tagis a menu tag. The resulting row would look like{f, f, t, f}. Moving out left from the aggregate function, a filter computation is applied against the collection for any existence of a TRUE/tflag. The lispy parentheticals are sleek!Thought: while the above option foregoes a subquery, I find the combination of

group byandhavingclauses with the aggregating semantics notionally similar to a subquery insofar as we are selecting over a secondary “view” into the data.It’s dawning on us: getting all the products without menu tags is not complex, although it may have seemed tricky at the jump. We’re only narrowing the product result set based on a single condition, it’s just that the condition happens to live a distance away. Let’s try again without the single select cleverness and consider nested queries:

select mp.* from marketplace_products mp where mp.marketplace_id = '#{id}' AND mp.id not in( select mp.id from marketplace_products mp inner join marketplace_product_tags mpt on mpt.product_id = mp.id inner join marketplace_tags mt on mt.id = mpt.tag_id where mt.is_menu = true);Because we now know that this query is shallow, both wide across only a few tables, and deep with only a single subquery, let’s see if Active Record’s query interface can provide similar conveniences like we achieved earlier with Ruby in our toy string reversal fractals above. As it turns out, we can one-line all that SQL!:

Marketplace::Product.where.not(id: joins(:tags).merge(Tag.where(is_menu: true)))This is slick, but might not be easy to come by without our previous work or deep knowledge of ActiveRecord. Our subquery version is informative for getting a mental handle on this terseness. We can reverse engineer. Notice how the entire subquery is captured in the outer parens pair. Now: the need for

mergeand passing in theTagmodel as the argument is a bit elusive because we might expect to be able to simplyjoins(:tags).where(is_menu: true). But this would error because Active Record would think we were applying thewhereclause toProductinstead ofTag, despite chaining on the join.mergeis a logical separator that let’s us filter across these two relations with more discreet semantics.Refine. Refine.

We can utilize the

is_menuscope on our Tag class, hiding ourwhere(is_menu: true)filter behind a shorthand that minimizes keystrokes:Marketplace::Product.where.not(id: joins(:tags).merge(Tag.is_menu))Ruby on Rails scopes are a brevity blessing. You can imagine where this might be going. Adding a scope for

without_menu_tagon Product will make querying and displaying results on our restaurant odering page a triviality. Dropping into the view layer we can grab the data and splat it into components with human-readable phrasing:<h1>Other</h1> <div class="grid lg:grid-cols-3 gap-3"> <%- marketplace.products.without_menu_tag.each do |product| %> <%= render Marketplace::Menu::ProductComponent.new(product:, cart:) %> <%- end %> </div>The

without_menu_tagscope can play well with other chainable scopes for quick prototyping and iteration for restaurant managers.<h1>Other</h1> <div class="grid lg:grid-cols-3 gap-3"> <%- marketplace.products.unarchived.without_menu_tag.sort_alpha.each do |product| %> <%= render Marketplace::Menu::ProductComponent.new(product:, cart:) %> <%- end %> </div>How shitty is that?

Mind meal tests your mind: do you go for the bait? If you check the Ten Gates, the ten kong-ans, does hungry mind, desire mind, not-enough-mind appear? If so, you must eat your mind meal. You must completely digest your understanding. Then finishing your mind meal is possible. Then you get enough-mind, no-hindrance mind, no I-myme mind. Enough-mind does not go for the bait, so everything is clear and you can perceive any situation in your life and kong-an clearly, and save all beings. – A DOG’S “WOOOF WOOF” IS BETTER THAN ZEN MASTER JOJU, Only Don’t Know by Zen Master Seung Sahn

-

habitations

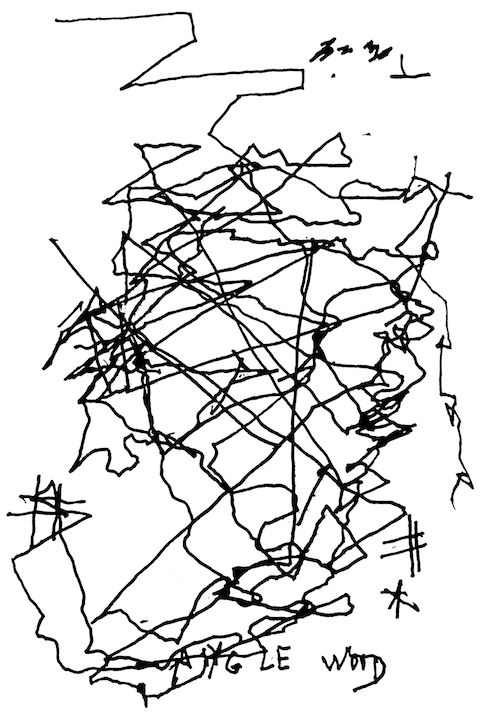

My sparse margin note couplet from Jon Bentley's Programming Pearls, also bit of a book review, and a whole ass

Saturday December 23, 2023

In 2015 I bought this book. 7, or 8 years ago. Year one into my software career. This book is canon. I've had little use for its studies of algorithms. # thus far, see below I don't think Bentley would be offended. First sentence: "Computer programming has many faces."I’m sure I glanced at its pages before entering the corporate interview gauntlet, to get in the vigilante mood. Like shaking out the shoulders when staring down a twenty two minute treadmill run (or pile of laundry Sunday evening). (You must to armor up for the algos in the kingdom of men.)

You know, Bentley’s pearls are usually the type of polished wisdom earned through real life experience I drift with, but this book never became the vade mecum for my quotidian affairs. Is it for others? Most of it, after Part I, was too metallic for an early-career front-end Rails dev. But the book can quickly be pragmatic in the earlier pages – see 3.2 Form-Letter Programming!. Templating is up there with the best of them.

The book is pedagogically caring (great headings). It’s pornographically geeked in thoroughness. The cases are so well-situated. Bentley spoils you with opinionated further reading and subjective sidebars that add the kind of first-hand-account historical/social context that remind you that the metal has been worked over by person hands.

It reminds you that software is not lonely.

Look out, there’s a modest amount of myth-making for his contemporaries and mentors. But who wouldn’t. The most honest plagiarisms. Yes/and he’s humbler than many. Ok maybe I have to read it again now that I have more years behind me.

Although I am a visual learner and thinker: logical word-problems were always a pain. In recent years I tend to reach for Bhargava’s Grokking Algorithms for a refresher on binary search or walking trees. It has cute pictures. It’s stacked next to other faves like Land of Lisp and Why’s (Poignant) Guide to Ruby.

Hmmm (thinking emoji, I will not look you in the eye as I cock my head buried in thots). After all the solstice konmari of my bookshelf – which I thought would elicit a reflection on some forgotten paper taking up space – has become the discovery of lost treasure. And yet, I’m still tickled by the scant amount of notes taken. I can’t read without a pen. Perhaps we’re time-traveling, then.

It's year one. Young Rails dev. He makes only two liner notes in a book considered staple grocery. I don't remember if I even read the whole thing. I bet I got through Part 1's wider lens, then started to gloss over.One note is an exaggerated check mark next to this principle listed on p29 (Second Edition, published 2000):

Rework repeated code into arrays. A long stretch of similar code is often best expressed by the simplest of data structures, the array.

With “the array” also underlined from the outer left edge of the “t” to the outer right edge of the last “a.”

This one has stuck. Arrays, especially maps, can be more durable when staring down change. Before long any thoughtful software developer will realize that the components of a software system will become ordered and/or multiple.

There is no lonely software.

Data, whether as scalar, “bags” of properties, even subroutines and processes, even the manual lever pulling human tasks must be sequenced, serial, bound, reversed, indexed, sorted. The maintenance cost of housing data in ordered collections is generally low.

[<anything>, <anything>]Whole ass languages, like Clojure, were designed to favor ordered, associative arrays. As I learned after finding Hickey’s seminal talk Simple Made Easy. (Richly simple and luxurious as Hockney’s bathers.)

A second mark: a vertical line next to the opener for section 12.2 One Solution. This was (yet another moment in my lifetime) I learned that we never have to go it alone when staring down the void of what we’re building next:

As soon as we settled on the problem to be solved, I ran to my nearest copy of Knuth’s Seminumerical Algorithms (having copies of Knuth’s three volumes both at home and at work has been well worth the investment).

This has also stuck, reified after seasons.

-

habitations

Tuesday November 21, 2023But night software is not like that. It’s not written for the day job. It’s not written to see the light of day at all. It’s not written to be looked at and scrutinized by anybody. It’s intimate and personal, it’s messy and buggy. To take a look is to transgress.

Boundary cross. Sex.

From a small heap software that helps make software. By way of Devine Lu Linvega

-

habitations

DEI Grieving and AI Skepticism

Tuesday November 21, 2023IYKYK DEI in tech is all but dead. If you’re an outsider, it’s important to understand that employee-led efforts to make workplaces less racist – which began in earnest at the onset of the Obama years – have been steadily blotted out since Trump. The Tech aristocracy has decided to abandon DEI budgets along with other workplace perks. As @betsythemuffin notes (in the thread I’ll be referencing below):

DEI is seen by a sizable chunk of the funder class as an allowable luxury to keep the peons happy.

It has been tragically hard to swallow this defeat. @betsythemuffin again:

Regret that we didn’t do more to build material power when we had the chance.

And yet, as the movement has regrouped and recentered to reposition the struggle, some have begun to take note of a problematic adaptation in the techy left’s rhetoric. @danilo started a thread on Mastodon that points out a new-born form of absolutist skepticism for emerging technologies – notably AI (of course). Regardless of why the left slips into what @glyph calls a problem of “semasiology” around the term “AI”, point is, the hurt seems to be cutting so deep that the movement has retreated into an unproductive preservationism.

The abject and understandably heartbreaking defeat of DEI-type progress in tech has moved most leftist critique of the space into a technological conservatism built on denialist, magical thinking.

You can’t successfully advocate for a dead end when the other side is investing in roads that lead to helpful places.

You can’t win against technologies people find useful by pretending they aren’t useful.

Check out the rest of the conversation. There are smart people in here thinking through this “AI” moment. The underlying question seems to be how we can continue the fight without completely disavowing what might be practical revolutions borne out by this albeit early phase of “AI” that is “finally bearing serious fruit.”

If you’re wondering on what side of the line I fall, my major beef with the LLMs is their wanton extractive consumption of human labor without citation or, god forbid, recompense. Of course, a time honored tradition of slave traders and capitalists. Here is Marc Andreesen with a recent plea written to the US copyright office, hopeful we can just all ignore this blatant theft for the common good of the funder class. This is class warfare:

Imposing the cost of actual or potential copyright liability on the creators of AI models will either kill or significantly hamper their development.

I hold that citation is feminist since it combats the authoritative mansplain that obscures collaboration, hardens selfhood, and hoards ideas. Ergo I consider the de-linkified, referent vacuum of effluvium produced by ChatGPT et al as patriarchal in its presentation of “knowledge” and preoccupation with mastery. I’m scare quoting because philosophically I’m confused about what this content even is, so unhinged. Although I recognize that the interplay of this text with the reader and their investigative context does produce meaning.

UPDATE (minutes after the writing the above): heh, Danilo actually expounds generously about the left’s false critique of AI in a long-form piece on his blog.

-

habitations

Lament for Tafteria: sailing the Apollo's docs

Saturday November 11, 2023

Documentation, naming things. Hard. Let’s see this hardness compound at the intersection of these two phenomena in the real world.

I’m casually scanning the Apollo docs, reading up on subscriptions because my wife is writing graphql client code at work. (I had vague memories of how we worked with distributed event streaming at my last job. NestJS + Kafka. We had consolidated apis into a graphql layer, but worked with Kafka event streams through Nest with the

kafka-jslib as a bridge. Point is, I wanted a light refreshment of how this stuff works.).It’s a frustrating moment to jump between these two pieces of the documentation. (This is supposed to be a mature framework (sobbing, why can’t we have nice things)). From 2. Initialize a GraphQLWsLink to Enabling subscriptions.

The former section, which walks us through client setup, provides a callout with a detour into the server docs for grabbing the connection url we need for configuring and instantiating our web socket linkage.

Replace the value of the url option with your GraphQL server’s subscription-specific WebSocket endpoint. If you’re using Apollo Server, see Setting a subscription endpoint.

If we are using Apollo’s server, then we must pass a

urlproperty when creating and instantiating theGraphQLWsLinkobject .const wsLink = new GraphQLWsLink(createClient({ url: 'ws://localhost:4000/subscriptions', }));HOWEVER, once we hyperlink to the server docs one simply cannot find – throughout the entire web document – a mention of “endpoint.” We’re dropped into a section called “Enabliing subscriptions” – instruction for setting up the server side bits.

Let down. Expectations missed. Problematic asymmetry, lack of consistency!

Repletely kerfuffled, my synapses delivering a reminiscence of Tom Benner’s big bird bound Naming Things, and his chapter on Consistency. (This so happens to be one of my favorite dogmas of Better Naming™️.) Well, the Apollo docs demonstrate a similar symptom of bad naming, just like the kinds of bad examples we oft find in code. Join me in considering disparate, hyperlinked documents as analogs to components of a software program that exchange messages. Readers of these texts suffer the bad naming with familiar symptoms: frustration. Capitalists lose the most with anemic productivity.

If the hyperlink is a documentation document-as-component api/interface, us readers should be allowed to charismatically move back and forth without being flung out from our flow state like starships intercepted in warp. Perhaps the doc could send me to a subsection of “Enabling Subscriptions” titled/anchored with “Server endpoint” (or the like). My instinct is that there’s a deeper scoop here.

Of course, there’s an imperfect system to blame. When Trevor Scheer removed all mention of “endpoint” in the docs update preparing users for Apollo Server version 3 back in 2021 – Apollo Server would no longer include inherent support for websocket protocols – how closely did he collaborate with Stephen Barlow who initially committed the instructions referencing endpoints a year or so before. Do the people matter? (They do.) And from under whatever constraints they perform these literary efforts. We can wonder, imagine, daydream about the socio-technical system that produces docs for clients and servers, and how the basic capabilities of the web link them together. The bounded contexts. The challenges of producing universal languages, ubiquitous terms across what likely are two disparate teams.

There are some leaky abstractions, but it’s not a total system failure.

I’m reading Bax.

Dispatches, bulletins, papers, pinned up, spewed about The Hospital Ship.

Page 15: A chronic shortage of pins to fix up the reports, so that in consequence they blrew away and anyone in the stern of the ship could reach out a hand and collect bulletins from the air as they drifted by in an endless paper-chase over the stern of the boat and on into the sea.

Page 98: Did the programmers hope to attract some reply? The

Hopefuldid not know what replay to make so they transmitted their call signal only, but there was no response.Euan wants to blame Tafteria, too bad.

-

habitations

Closure was small, TypeScript is big (thinking w/ Dan Vanderkam)

Wednesday November 1, 2023

The dangers of premature optimization are well understood among software engineers who’ve been frustrated when the secondary effects of the efforts end up compounding maintenance cost. (Does this principle play out in other milieu?). What’s perhaps more interesting to ponder is when “advanced optimizations” are decidedly beneficial but cannot be implemented because of larger system and social dynamics that require greater interop. Here I’m thinking with Dan Vanderkam’s post from back in Sept The Saga of the Closure Compiler, and Why TypeScript Won.

Dan skips gingerly back through recent web/computing history to discuss the (obvious in 20/20) demise of Google’s Closure compiler. The hardware of the early aughts demanded Closure’s designers focus on minification. In contrast, if the typed project(s) (TypeScript) of today are going to succeed beyond niche usage, they must play well with an expansive ecosystem of <other people’s code (le sigh!) which might not be typed. Despite the heroic campaigns of developers to slap types on their everythings (

DefinitelyTyped), the JavaScript universe offers no gaurantees to makes of tools. TypeScripts declared horizon ofBe a cross-platform development tool

must be joined by

Emit clean, idiomatic, recognizable JavaScript code

and further qualified by the non-goal of

Aggressively optimize the runtime performance of programs. Instead, emit idiomatic JavaScript code that plays well with the performance characteristics of runtime platforms.

I wonder how many TypeScript developers have looked at the TypeScript Design Goals? Not to call out/in, just curious.

I wonder, the Midnight’s 2016 Endless Summer. Saxophone crying out from the other room.

-

habitations

Language ergonomic studies: Possession: Ruby, JavaScript, D/s, Mycology

Tuesday October 31, 2023

There exist happy little clouds of coincidence when studying new languages. And not just across the computer ones, mind you!

Ruby and Indonesian share a frugal brevity and disinterest in flairs of punctuation when managing possession.

Itu bukuku.vs

That's my book.And…

me = Person.find(my_user_id) my_books = me.booksvs

const me = await prisma.user.findUnique({ where: { id: 99, }, include: { books: true } }); myBooks = me.books;Well, Rails AR query syntax vs Prisma (NodeJS). (Also, did I do a useful interpretation of linguistic possession in a soft coding expression??)

This isn’t YAOFJS (Yet Another Opprobrium for JS). I don’t have a quarrel with English, either. Although I find it often fairly dull when it’s not rescued by the reticulations of regional and immigrant (forced or free) anastomosis of the tongue and mouth (and whatever other maneuvers contribut to nudging the Queen’s own verbage (I’ve forgotten if there is anything before a body without organs, how is it done???)) that take root in everyday speech.

Only a mere 30 pages (of 250) into Make the Golf Course a Public Sex Forest and you run headlong into Raechel Anne Jolie’s musings on D/s, mushrooms, and mouths. There’s probably something here to dwell on with respect to power, colonialism, oral sex, entangelment, Dulcinea Pitagora’s “subspace” in BDSM play (ohhh, wonderful that this term wasn’t entirely pre-figured by Roddenberry’s universe expanse), etc…

Cue Butler’s Xenogenesis, ringing in my ears.

-

habitations

Language ergonomic studies: Ruby vs JS one-liners

Thursday October 26, 2023Coming from JavaScript, I was not expecting Ruby’s

Enumerablemodule to have methods for selectingminandmaxvalues from a collection. Especially with an added parameter that lets you “take” a range starting from zero.For comparison, what if we needed the lowest

xnumber of values from an array of numbers.In JavaScript we’d sort numerically (requiring the conspicuous umbrage a declaring for the umpteenth time a comparison function) and then destructuring out the result.

function twoLowest(numbers) { return numbers .sort((a, b) => a - b) .slice(0, 2) .reduce((a, b) => a + b, 0); }Anyway, written in 10 days.

Matz giving us many ways to do things.

I want to make Ruby users free. I want to give them the freedom to choose. People are different.

From:

🔗 The Philosophy of Ruby: A Conversation with Yukihiro Matsumoto, Part I

def two_lowest(numbers) numbers.min(2).sum endEnumerable methods that can receive an operator as symbol. Glacial autumn breeze whipped up from the 101. I don’t have a brevity fetish, like I know many of you do. Or the tendency to place the one-liner upon the highest of pedestal. It’s the obvious natural-language-style readability of the Ruby here that is simply remarkable.

Achieving this is possible in JavaScript with more fanfare. But, alas, the maintenance cost of dressing up our array in Ruby’s fast casual couture.

class NumberArray { constructor(numbers) { this.value = numbers; } min(n) { function compareNumbers(a, b) { return a - b } this.value = this.value.sort(compareNumbers).slice(0, n); return this; } sum() { function add(a, b) { return a + b } return this.value.reduce(add, 0); } } function twoLowest(numbers) { return new NumberArray(numbers).min(2).sum(); } -

habitations

Rspec vs js, let vs let

Thursday October 26, 2023I love comparison pieces like Steve Hicks' article What JavaScript Tests Could Learn From RSpec that juxtapose the syntaxes, rules, shibboleths of two programming languages. Comprehension comes more quickly.

Interesting. In describing the perceived advantages of rspec’s

letmethod to declutter code, I noticed Hicks fails to mention this little maneuver he performs of encapsulating aresultfunction –resultandgetResultfor rubes and javs respectively. It would seem this strategy is a given for him. But in my experience it’s one I’ve shied away from in JavaScript tests – especially any of non-trivial sophistication.I prefer the subject invocation repeated in each

itblock. I find this repetition comforting. Like:describe("when the first value is negative", () => { describe("when the second value is negative", () => { it("returns a positive number", () => { const second = -3; const result = calculator.multiply(first, second) expect(result).toEqual(3) }) }) describe("when the second value is positive", () => { it("returns a negative number", () => { const second = 3; const result = calculator.multiply(first, second) expect(result).toEqual(-3) }) }) })Also, unlike what I’m doing above, Hicks is declaring the value of

secondinbeforeEachblocks to hint at how more sophisticated JS tests would be written with heavier logic to execute before one or more test runs within the same scenario/context. In practice I find you often do both in JS tests – do preparation inbefore*blocks and declare single-use constants withinitblocks (vs re-assigning/re-using).Generally I keep re-assigning/re-using variables to a minimum if they can be localized – it is confusing to follow in JS. Beware of cross-contamination. Pollination. Sex.

But why: for some reason the concise syntax of let seems easier to track. Lack of curly braces?

This doesn’t really bother me though:

let sharedVar; before(() => { sharedVar = ... }) describe(() => { it(() => { sharedVar = ... }) })Is rspec

letbetter than JSlet? Of course of course, it depends. le sighEven rspec maintainers caution us, as Hicks notes. Convenience always carries a caveat in programming. This is a substantial pillar of the “readability” discussion: durability of convenience.

Note: let can enhance readability when used sparingly (1,2, or maybe 3 declarations) in any given example group, but that can quickly degrade with overuse. YMMV.

Cool find: givens. Shoutout to the translators, the transcribers, the interpreters.

-

habitations

With Anne-Marie Willis, professor of design theory at the German University in Cairo.

Wednesday July 5, 2023Another world is possible. But what of worlding? How to world?

When a Farley’s barista is a strong current; wow the level of shine escaping her mouth. And I loved it. The slightest tickle of maple syrup was a great recommendation!

Per usual I sent myself something to read and didn’t cite the source so I’ve been having that weird kind of drifty, but tethered, Pong ball experience. Was it mentioned by someone in a Paper in Systems discussion? Maybe a content of the fediverse’s systems thinkers briefly held in place by my thumb.

As I like to say (with often different verses), I do software development because it’s a phenomenological wonder of people, text, code, time travel, non-determinism – and always pluckily avoids fitting neatly into capitalist fetish.

Like, software projects (already “bad” (incomplete, inaccurate) lexicon) of any substantial scope are only delivered on time accidentally.

Frederica Frabetti supports this axiomatic chiste of software, noting in her book Software Theory:

“The central problem of software development is thus the impossibility of following a sure path in which a system is completely and exhaustively specified before it can be realized.”

and

“Stability is something that happens to the system, rather than being scheduled and worked towards.”

Look how software was virtually helpless to build complex systems that are designed to become eventually consistent.

For me, software is philosophical-ing. An ontological milieu. Therefore Willis' essay is soooo good for those of us who are philosophical-ing, but not academically trained – and who can drift into this kind of theoretical reasoning easily, especially in an essay like Ontological Designing which onboards us you into hardcore theory with a ton of grace. Willis doesn’t need to spend her initial breaths on defining “ontological” and and Heidegger’s “being”, and (re)introducing us to the failure of the Western metaphysical tradition. But she is generous; and that’s likely the point. A broader appeal.

Which, in turn, helps the mind Pong around during an essay about pervasive push and pull.

I’m reading this essay about how we effect social change but also thinking through the systems reasoning and how it vibrates into software ontology. Well, there are references to IT infrastructure and other “equipment” of our present epoch – though they do not supersede other kinds of equipment. For Willis, our contemporary technologies can function both as examples of any designed material, as well as juxtapose with the immaterial (organizational structures, administrative systems, etc…) to demonstrate how they are equivalent as objects/outcomes of the ontological design circularity/looping, bi-directional reach – Heidegger’s grabbing Cartesian dualism by the shoulders and shaking them. (“Ontological designing refuses such one dimensional understandings of (human) being-in-the-world, which are worn-out fragments of enlightenment thinking and Christian morality sloppily stitched together.") As Willis notes, Heidegger themself reaches for the simple household jug to work with in his pursuit of how things, thing.

“The jug gathers and unites these.”

These being: water, wine, sky, earth. Gathered/outpoured.

But she talks about tech in a way that tickles, for sure. Especially it’s excess as it frenetically infinite loops rather than unlocks potential:

Rather than inducing us into a world of multiple creative possibilities (as software advertisers would have it), [computers] design us as users into their horizons of possibility – which by the very nature of horizons (in Gadamer’s sense) always have a limit. In fact, the proliferation of options within even a basic operating system or software application becomes a tyranny of choice, a maze of seemingly endless possibilities, a dazzling instrumentation for its own sake, all means with no end in sight.”

Maybe free software never will be truly free.

-

habitations

Naur, goodies, 1985 years after Jesus Christ

Thursday June 8, 2023There are so many goodies in Naur, 1985. Filter, some():

- Declares there’s no right way to write software

- (Further) rebukes the scientific method

- Calls out lack of empirical study of software methods

- The programmer as “manager” of computer activity

Thank you ceejbot for further distillations.

-

habitations

Naur, the optimist, 1985 years after Jesus Christ

Thursday June 8, 2023The year is 1985. Certain kinds of optimism abound in programming circles. From Peter Naur’s Programming as Theory Building:

"It may be noted that the need for revival of an entirely dead program probably will rarely arise, since it is hardly conceivable that the revival would be assigned to new programmers without at least some knowledge of the theory had by the original team."

The infamous “shit mouse” bug that I pushed to production in 2018 – which subsequently became an iconic team joke with its own concomitant laptop sticker swag – was the direct result of software abandoned in the wake of absconding team members. Picking up dust-laden software seems like a common occurrence these days, no?

In their reading-with of Naur, Ceejbot offers a valuable remediation technique for deleterious knowledge vacancies. It’s one that I personally strive for in everyday software practice: gross amounts of maintainer documentation.

Don’t waste time documenting what can be seen through simple reading. Document why that function exists and what purpose it serves in the software. When might I call it? Does it have side effects? Is there anything important about the inputs and outputs that I might not be able to deduce by reading the source of the function? All of those things are clues about the thinking of the original author of the function that can help their successor figure out what that author’s theory of the program was.

and

…the program exists to solve a problem, some “affair of the world” that Naur refers to. What was that problem? Is there a concise statement of that problem anywhere? What approach did you take to solving that problem statement? What tradeoffs did you make and why? What values did you hold as you made those tradeoffs? Why did you organize the source code in that particular way? What belongs where?

-

habitations

Power Moves

Tuesday June 6, 2023During her novel workshop on estate planning, Sarah Deluca of Move Money Shift Power poses the question:

Is holding onto control after your death something you want?

If part of our life’s work is to strengthen connection, relinquish power, share power, redistribute, does a Trust actually violate the moves we’ve been making at the speed of small “t” trust? Individualistic posthumous scheming is not the invention of communities, but of corrupted powerfuls. It would seem.

A few days later I found myself in the next Paper in Systems discussion led by Dawn Ahukanna and Shauna Gordon-McKeon. On the table was Shauna’s essay Interpretive Labor: Bridging the Gap Between Map and Territory. Wherein you’ll find a rich investigation – and then interrogation – of the lopsided power distribution between those that labor to imagine, construct models, maps, and those that sit with the effects of the implementation – who interpret, navigate, bridge the chasm between theory and material outcomes.

I was called in. Software engineers do wield an outsized power from behind the desk. Although the tower isn’t deathly bleached, because 1) we do some interpretive labor at the seam between business requirements and software building (system design, theory of, code/text writing), and 2) failures reverberate back to us pretty hard (midnight pages) – at least more acutely than through the beauracratic layers. (Do the capitalists get to feel much of anything?) Nonetheless, we probably aren’t the ones screaming during the scream test. What do we do with this power?

Jorge Luis Borges - Self Portrait -

habitations

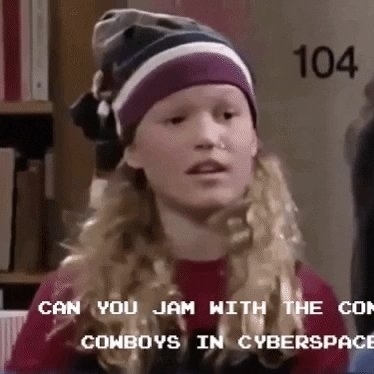

Can you jam with the console cowboys in cyberspace?

Saturday April 29, 2023No. You can’t. Not yet.

It’s quite possible that my work in computers today is a last ditch to actualize a childhood fantasy of solving neighborhood crimes with my friends in Brooklyn with the help of a friendly ghost that communicates via word processor. You type, then we type, Ghostwriter.

The way she caresses the monitor in this scene: longingly, tenderly, expectant. The arrogant gush of buzzwords. Unalloyed after school cool.

-

habitations

Saturday January 8, 2022Redis Poem

set things

set them to expire

set them to be exclusive

set them to expire, be exclusive(inspired by a chat with mike b)

-

habitations

Well-known ways that JavaScript coerces objects to strings

Monday January 25, 2021It’s a proper cliche of commercial computer programs to bind audit reporters alongside code at important relay nexuses. There are a panoply of reasons to extract information this way: producing audit trails for legal compliance, gathering product insights, collecting debug traces for quality monitoring, etc… The trick is wresting useful emissions from these reporters when they are working within a dynamically typed language that might unabashedly take liberties on ambiguous data types – like JavaScript. Typically you design your loggers to capture a Good-Enough Facsimile™️ of the current state of the world for later analysis1. This likely means grabbing the subject of the current operation as well as felicitous supporting actors like application and session context, and user info, in Object form. (Already a funky transmigration of Subject/Object). Oftentimes the subject is the user – we love to know you, and know to love you. But, if we may clasp hands and walk/skip through a hallucination together into a fantasy e-commerce example, we can explore logging and value coercion of a familiar Order class. What would be a blog about building websites without a bit of retail market magic.

Let’s start with the following modest invention which terraforms1 a theoretical backend piece of an online shop. And let’s imagine that from time to time, orders that come into this shop become stuck during the fulfillment process – perhaps for a variety of reasons because we are dealing with, say, medication items. Insurance claims may be rejected, the doctor or pharmacist discovers a conflict between the patient’s health attributes and the meds. In such a scenario, this code attempts a fully-automated retry of the unstuck order, and in due course, at important nexuses, passes relevant contextual data to log handlers. From there other bots or real-life persons can take over.

const oldestStuckOrder = await query(orders, { filter: 'oldest_stuck' }); const logData = { order: oldestStuckOrder, userInfo: { ... }, sessionInfo: { ... }, }; logger.info(`Start resolving stuck order: ${logData}`); const reason = determineStuckReason(oldestStuckOrder); if (reason) { const result = resolveStuckOrder(oldestStuckOrder, reason); const logDataWithResult = { ...logData, reason, result, }; logger.info(`Finished resolving stuck order: ${logDataWithResult}`); } else { const logDataWithReason = { ...logData, reason, } logger.error(`Unable to resolve stuck order: ${logDataWithReason}`); }There are three points in this code where I’m sending a rich object into a logger service which only accepts a single string argument. In this fantasy, this particular team of software developers just wants the logger service API as simple and straightforward as can be: unary and stringy. I hope this code reads naturally, that it’s similar to something you’ve seen before. And…hopefully your gears are turning already and you are starting to see with me 𓁿; and you are beginning to feel awkward 🙇🏻♂️ about what representation for

oldestStuckOrderorlogDataWithReasonthis gussied-upconsolefunction will display. Won’t interpolating complex objects inside a template string force the engine to implicitly coerce the object into the obstinately churlish[object Object]?Scene opens, your PM marches up to your desk with a bemused frown:

PM: What happened with Order #555-5555

Me: The problem is that this order got into an illegal state!

PM: Which order?

Me: Oh, the object object order.

PM: 😒JavaScript is funky-beautiful because the dynamic typing nature of the lang means you can smush values of mismatched types into the same context and your program won’t catastrophically fail when you run it. Loose assumptions loosely held, I guess. When we write JavaScript, we often take this for granted, and we exploit it for good. You probably know and understand intuitively or consciously the bunch of common “contexts” where the language affords this failsafe. Here’s a general breakdown:

- expressions using arithmetic operators

- comparison operators

- text expressions (

ifstatements, the second clause of aforloop handler, the first clause of a ternary operator) - interpolated strings.

That last one is case in point; we can send an object into a string context –

Finished resolving stuck order: ${logDataWithResult}– and get something workable out the other end:const logDataWithResult = { prop: 'prop', anotherProp: 'anotherProp', }; console.log(`Finished resolving stuck order: ${logDataWithResult}`);And there it is. Workable (quite generously). The famed proterozoic, bracketed notation of familiar churlish conceit and “bad parts” motifs. Obviously this not the best guess we hope the engine to make when executing our logging function – we have lost all that rich order and user data! Our compliance trail is meaningless. But we shouldn’t despair quite yet. I’m happy to share that JavaScript exposes many an API for developers to control the return value of type conversions. If JavaScript is anything it’s a fairly open language. Not necessarily open for expansion to the extent of, say, Clojure’s macros. But all things being mutable objects (with a few untouchable properties) and a handful of scalar values, you have a good deal of freedom. For coercing objects to string, the most famous method is probably

toString(). In fact, JavaScript dogfoods its owntoString()for object -> string conversion automatically in all those common contexts listed above. Wheneverthe object is to be represented as a text value or when an object is referred to in a manner in which a string is expected – MDN contributors

Like between backticks.

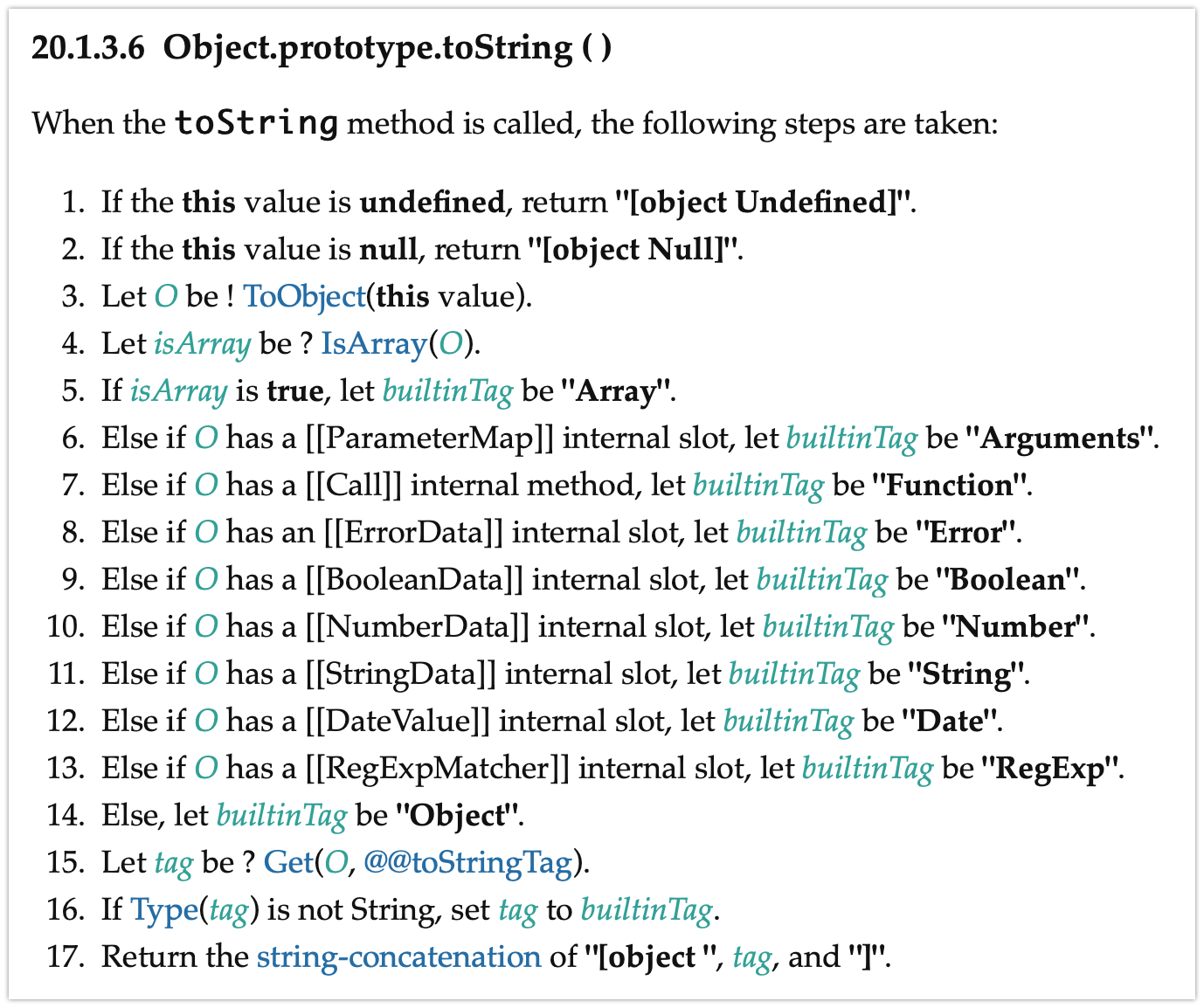

Now, if a fellow developer in our dreamy medication retail shop codebase has not already come along and monkey-patched the

Orderobject’stoString()method, the default conversion algorithm rules defined forObject.prototype.toString()from the ECMAScript® 2021 spec section 19.1.3.6 will kick in. Yep, we are going there. The algorithm is actually pretty easy to understand (though here’s to hoping your browser of choice plays along!). I invite you for a glance:Can you see where

[object Object]comes from? If the unfamiliar dialect is a bit intimidating for this casual Sunday morning read, here’s what the above algorithm would like if we implemented it in JavaScript4:import { SecretInternals } from 'secret-internals'; const internalSlotsToTagMap = { ParameterMap: "Arguments", Call: "Function", ErrorData: "Error", BooleanData: "Boolean", NumberData: "Number", StringData: "String", DateValue: "Date", RegExpMatcher: "RegExp", } function toString(value) { if (value === undefined) return '[object Undefined]'; if (value === null) return `[object Null]`; let builtinTag; const innerValue = innerValue = SecretInternals.Object.box(value); const isArray = isArray(innerValue); if (isArray) { builtinTag = 'Array'; } else { for (const [key, value] of Object.entries(internalSlotsToTagMap)) { if (SecretInternals.Object.hasInternalSlot(innerValue, key)) { builtinTag = value; } } } if (!builtinTag) { builtinTag = 'Object'; } const tag = SecretInternals.Object.get(innerValue, '@@toStringTag'); if (tag !== string) { tag = builtinTag } return `[object ${tag}]`; }For actual objects, not object-like things (Arrays), the algorithm falls through to step 14 where a temporary referent called

builtInTagreceives the valueObject. This built in tag is later used as the second part of the converted argument value.I had a JavaScript joke but it’s [object Object]

— Greg Meyer (@grmeyer) July 26, 2020Despite the sarcastic jabs from the peanut gallery, what else would we expect the language to do. JavaScript’s unintentional emissions were designed for a platform that projects them through UIs for consumption by masses of retinas of human people – it’s Good Enough. The language keeps your program running with a type guess and leaves the contents of your value alone. It doesn’t, like, radically unpack and serialize your contents to JSON (what compute or privacy costs might lurk!); or try to set the built in tag to the left-hand side of the last variable assignment statement: what a drastic move, the language doesn’t work this way: variable names are not synonymous with types! Variable assignment is void of any tautological binding! JavaScript just lets you take matters into your own hands.

Until very recently I wasn’t aware of any techniques beyond an Object’s built-in

toString()property to futz around with type conversions. But apparently there are a few modern well-known Symbols5 that have entered the language to help. I’ve compiled an example with four different APIs I could track down. Any of these would play nicely for logging libraries, though the last only works in node, and there are nuances to each that you must consider for your use case.// 1. Override toString() (js, node) class Chicken { toString() { return '🐓'; } }var chicken = new Chicken(); console.log(

${chicken}); // 🐓// 2. Symbol.toStringTag (js, node) class Chicken { get Symbol.toStringTag { return ‘🐓’; } }

let chicken = new Chicken(); console.log(

${chicken}); // [object 🐓]// 3. Symbol.toPrimitive (js, node) class Chicken { Symbol.toPrimitive { switch (hint) { case ‘number’: case ‘string’: case ‘default’: return ‘🐓’; default: return null; } } }

let chicken = new Chicken(); console.log(

${chicken}); // 🐓// 4. util.inspect.custom (node) const inspect = Symbol.for(‘nodejs.util.inspect.custom’); const util = require(‘util’);

class Chicken { inspect { return ‘🐓’; } }

const chicken = new Chicken(); console.log(chicken); // 🐓 console.log(

${chicken}); // [object Object] util.inspect(chicken) // 🐓I haven’t formally surveyed this, but I can report anecdotally that of my current team of ~25 engineers who work on a universal JavaScript application, overriding

toString()is the most commonly preferred strategy. My sneaking suspicion is that many JavaScript developers are not aware of the more contemporarySymbolmethods/properties, even though these methods have been available in major browsers/node for ~4-5 years. Or maybe it’s simply a matter of many backend devs coming to backend JS from other languages and server environs. From what I understand, node has just started to finally emerge in the past few years as fit-enough for prod. JavaScript is vast territory, quickly expanding, in multiple runtimes – it takes years.As for

nodejs.util.inspect.custom, I haven’t been around node land long enough to know if its usage is idiomatic.Still, preference for

toString()may not simply be an issue of keeping up with the JS Joneses. As shown above, the outcomes of these different strategies are not identical. What’s more, to layer on the complexity, these options aren’t wholly independent. In fact, JavaScript combines these strategies together under the hood. Did you notice what was going on in step 15 of the conversion algorithm above? The spec requires thatObject.prototype.toStringlooks up the@@toStringTagsymbol property on the object – these symbol members are in the DNA sequence now. When we take control back, understanding the spec is quite key: we can avoid mistakes like combining these two options since overridingtoString()always take precedence. For example:class Chicken { get [Symbol.toStringTag]() { return 'Base 🐓'; }toString() { return ‘This is a 🐓’; } }

class JungleChicken extends Chicken { get Symbol.toStringTag { return ‘Jungle 🐓’; } }

const chicky = new Chicken(); const jungleChicky = new JungleChicken();

console.log(

${chicky}); console.log(${jungleChicky});However, say I were interested in simply tagging the string representation of my object to protect exposing its value contents, but still present a semantically rich identifier to consumers. This would help us express a desire to maintain the default bracket output –

[Object..]– with the prepended “object” type to maintain some consistency with how objects are stringified in our code. In that case, leveraging the well-knownSymbol.toStringTagproperty would be the way to go. For example, the following logger from our e-commerce imaginary might obscure private user data like this:// .../jsonapi/resources/user.js class User { get [Symbol.toStringTag]() { return `User ${this.id}`; } }// Somewhere else… logger.error(

Unable to resolve stuck order: ${logDataWithReason}); // Start resolving stuck order: // { // order: {…}, // userInfo: [object User:123456], // sessionInfo: {…}, // };Your next option, empowering even more fined-grained control, is adding a

Symbol.toPrimitivemethod to your object.Symbol.toPrimitiveis a main line into the runtime’s coercion processing. After playing around a bit in a browser and a node repl, I’ve noticed that this Symbol will precede over a providedtoString()override.class Chicken { toString() { return 'This is a 🐓'; }get Symbol.toStringTag { return ‘🐓’; }

Symbol.toPrimitive { switch (hint) { case ‘number’: case ‘string’: case ‘default’: return ‘This is a 🐓 primitive’; default: return null; } } }

const chicky = new Chicken(); console.log(

${chicky})By using

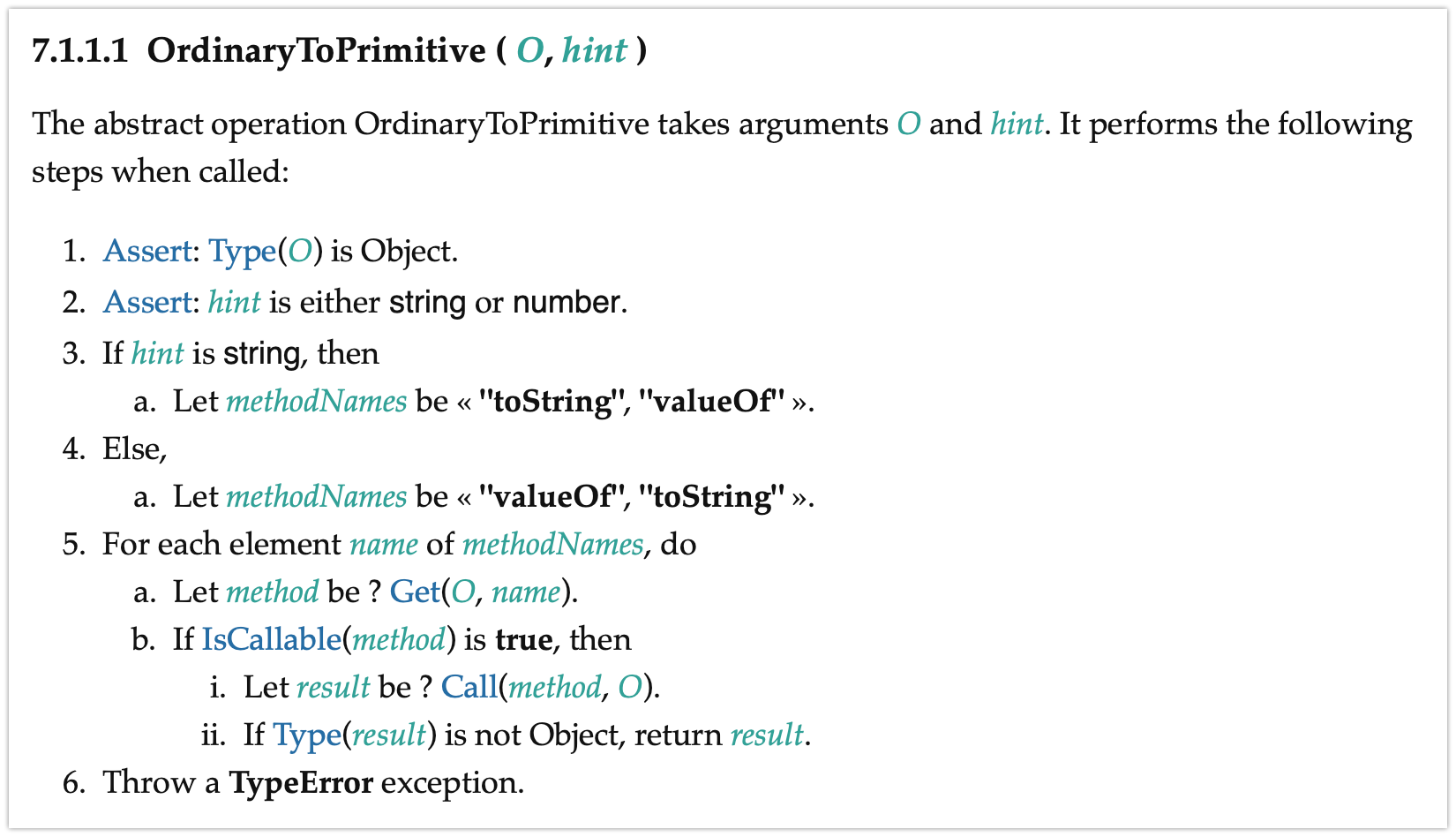

Symbol.toPrimitive, you’re basically instructing the runtime to LOOK HERE for all its object coercion preferences. What’s more, beyondSymbol.toStringTag’s mere label augmentation, you get a powerful indirection2 to handle all primitive type coercion scenarios1. You’re also overriding internal language behavior which – I was surprised to learn – effectively resolves how to order the calls forObject.prototype.toString()andObject.prototpye.valueOf(). Flip to section 7.1.1 of the spec to see how theToPrimitiveabstraction is designed to call a further nestedOrdinaryToPrimitiveabstraction for decision-making:Translated for comfort:

import { SecretInternals } from 'secret-internals'; function ordinaryToPrimitive(object, hint) { if (SecreteInternals.isObject(object)) { throw TypeError; } if (hint !== 'string' || hint !== 'number') { throw TypeError; } let methodNames = []; if (hint === 'string') { methodNames = ['toString', 'valueOf']; } if (hint === 'number') { methodNames = ['valueOf', 'toString']; } for (methodName of methodNames) { if (SecreteInternals.isCallable(methodName)) { const result = SecreteInternals.call(object, methodName); if (SecreteInternals.isNotObject(result)) { return result; } } } throw TypeError; }I think I like the idea of using these well-known symbols for custom object -> string representations, if for the collision protection alone.3 What would it be like to reach for the powerhouse of

Symbol.toPrimitiveto hijack the runtime from eventually calling through toObject.prototype.toString(). Furtive, conspiratorial whispers to the interpreter 🤫. Even a partially implemented reducer will do, as I demonstrate above in my chicken example above: the switch statement can gracefully sidestep other type hint cases and only target the string hint case. But is grabbing forSymbol.toPrimitiveoverkill?toString()is tried and true and a pristine greenfield function block without arrogant “hints” and a naive switch statement without pattern matching 🙄 (are we there yet?). Could there be non-trivial DX cost of confusing other developers if the other primitive case statements are fall throughs?

1: Whenever I think about how software tries to capture its own understanding of the world it creates, I’m brought back to systems thinkers like jessitron:

"We don’t expect the world to have perfect consistency. Yet we wish it did, so we create facsimiles of certainty in our software.

It’s impossible to model the entire world. Completeness and consistency are in conflict, sadly. Still, if we limit “complete” to a business domain, and to the boundaries of our company, this is possible. Theoretically.

2: By “indirection” I really mean to invoke Zachary Tellman’s exegesis of semantic drifts in software industry lexicons from his book Elements of Clojure. His work is a really nice refinement:

Indirection provides separation between what and how. It exists wherever "how does this work?" is best answered, "it depends." This separation is useful when the underlying implementation is complicated or subject to change. It gives us the freedom to change incidental details in our software while maintaining its essential qualities. It also defines the layers of our software; indirection invites the reader to stop and explore no further. It tells us when we're allowed to be incurious.

He goes on to discuss that conditionals are one of two primary devices to achieve successful indirections (the other being references). Conditionals are effective because they contain ordered, "closed" decision-making mechanisms that avoid conflicts; in contrast to tables with indivduated keys.Conditionals solve conflicts by making an explicit, fixed decision. Where conflicts are possible, we use conditionals because they are closed.

3: That’s the primary purpose of these Symbols. For a deeper Support util.inspect.custom as a public symbol #20821 on GitHub.

4: Try not to think too hard about the implications of a JS interpreter written in JS. But, ya know, Atwood’s law; you will, not surprisingly, find JS interpreters for JS out there, many built on Acorn, which, is itself, a parser written in JS. Man alive!

5: I’m bemused and betwixed by this use of “well-known.” Does anyone know the origin of this qualifier?

-

habitations

Language ergonomic studies: Summing ranges and most frequent terms

Monday November 9, 2020Pennies per day (by day count) in Clojure:

(defn pennyPerDay [numDays] (reduce + (range 1 (+ numDays 1))) )(pennyPerDay 30)

Pennies per day (by day count) in JS:

function pennyPerDay(numDays) { return Array(++numDays) .fill(0) .reduce((sum, _, i) => sum + i, 0); }pennyPerDay(30);

Most frequent terms in JS:

const termList = ['term1', 'term2', 'term3', 'term3']; function mostFrequentTerms(arr) { return arr.reduce((result, curr) => { result[curr] = ++result[curr] || 1 return result; }, {}) }mostFrequentTerms(termList);

Most frequent terms in a list with Clojure:

(def term-list [:term1 :term2 :term3 :term3]) (defn most-freq-terms \[terms\] (->> terms frequencies (sort-by val) reverse)) (most-freq-terms term-list) -

habitations

Language ergnonomic studies: 52 card deck

Monday October 19, 2020Javascript:

const cards = () => ['♥','♠','♣','♦'] .map((suite) => (['2','3','4','5','6','7','8','9','10','J','Q','K','A'].map((card) => suite + card)))Clojure:

(defn new-deck [] (for [r [\♥ \♠ \♣ \♦] s [:2 :3 :4 :5 :6 :7 :8 :9 :10 :J :Q :K :A]] [r s]))💻

-

habitations

Zoom Doomed

Wednesday September 30, 2020Not a day goes by when I don’t witness the over signifying of “meeting”. “Meeting” invokes a collective sigh for laborers since a better way seems a fairy tale. As a consequence, possibilities for elevated communication are damaged. But a meeting is so much more:

Calling the coordinated exchange of messages or moderated access to shared material (like a blackboard) a conference or meeting seems to neglect a factor that is rather important in meetings. Often the explicit exchange of messages is relatively unimportant compared with the development of social relations in the group of participants that happens simply by the fact that they are close to each other as humans for a while.

Thus, we may be Zoom doomed. Perhaps. We need to hire facilitators and train our people in facilitation. It’s a technical skill that most technologists lack, profoundly.

-

habitations

Not so byzantine algorithm studies: Using math to deliver your medication

Wednesday September 9, 2020Like many commercial software developers, math plays a more sporadic role in my day-to-day work. That said, I certainly wouldn’t blithely demote knowing math below other techs like programming languages, web frameworks, and principles of software design/architecture; which already sets up a false dichotomy, anyway.

Math presents a beautiful core proposition to software developers. Through its powers of abstracting and reducing real-world dynamics into expressable, repeatable, sequential logic – which we can be lifted easily into our favorite coding grammars – math can be the companion dynamo we need for achieving levels of faithful verisimilitude for real world complexities (Keep It Savvy Stupid).

Italicizing knowing math is totally an intentional problematization to mess with the idea that knowing math is narrowly scoped to demonstrating technical prowess of calculation or proofing. I think software engineers can, and should, consider a relationship with math as knowing enough math; like, an ontological relationship with math’s formalism and general principles. Math The Good Parts, so to speak. Otherwise the bravado of equating math and problem solving or math as “hard” (dick) science of some kind is doomed to engender an axiomatic blur that will gate keep devs who are not deemed fit for lack of educational background or interest in fiddling with greek letters. Keep these gates wide open, por favor!

(I also think the focus on calculation in my early schooling, alienated from everyday material uses, made me quite Meursault for it – perhaps why I don’t feel the need to champion for being mathy. It took entering commercial software life much later in life to see how the dynamo helps us make stuff and therefore able to consider embracing math (and being embraced by math) again.)

Our praxis: we can coopt knowing math to ramify similar to our desire for Senior Engineers™ to be T-shaped with their knowledge of the stack in order that they can press more heavily on architecture and design. For example, we can desire for React developers to:

- Understand just enough graph theory to see component architectures as graphs;

- Understand the implications of data pipelining and message passing in a graph (bonus points for being a student of Christopher Alexanders' semilattices)

- Or understand just enough category theory to understand the heritage and benefits of composability of first-class functions – is JS even fun without a bit of FP? What else?

This is all just to say that some business problems map really well to higher-order maths and devs should be prepared for it, at least through the design phase. Again, once we sit down to write code, we can easily offload the fastidious implementation details; those few lines of copy|paste from the math deep state.

For example, take a common e-commerce retail problem like Distributed Order Management (DOM). Modern omnichannel selling combined with cloud computing open a broad field of possibilities to achieve low-cost order fulfillment. Affine cost structures – resulting from variable/variadic shipping dynamics – will increase complexity as an ecommerce company scales. We’re talking about quite a bit of real-world complexity to model and adapt to.

What, then, when we’re tasked to create an algorithm to satisfice a version of this problem where you have these rough requirements:

- Orders must be completely fulfilled

- There will be variant shipping costs per supplier

- Orders can be fully splittable by a supplier

This kind of thing will break your brain once you start to grasp for a rational boundary to contain the argument ramifications: supplier availability, supplier inventory availability, etc…

I was presented with a challenge like this recently and it took me a couple hours just to understand what this problem domain was; like, find my way to DOM through off-by-one google searches; a true StumbleUpon revival. Because I didn’t know the math well-enough yet. Google is pretty fast though, and within minutes I practically broke out in a sweat after discovering multiple long-winded computer science papers written on the topic filled with intimidating mathy notations. Then more OMGs and mon dieus as I careened sharply into Cantor’s theorem and Set Theory. Wait, am I doing math? Oh mersault!

More and more it seemed a fantasy that I’d be capable of solving Amazon within a reasonable amount of time because this gestalt was increasing aggressively. Nonetheless, after some deep breaths and patience recoup and having worked with enough devs without math experience, I began to inculcate to this world and realize any solve was going to be an approximation of some sort and probably wouldn’t require me to crack open a text book; there was no silver bullet or excavation of secret proofs. Rather, this whole class of optimization maximations applied to fulfillment problems is a rigorous academic field, but when it meets the metal it softens and warms for Good Enough™️ programming. Each potential brute force linear assignment or dynamic programming algorithm was discarded. My inputs couldn’t be structured into a decision table or cost matrix amenable to path finding, traversal, or Cartesian production. Which meant I could rule out potentials like Kuhn’s Hungarian algorithm. In fact, the scope was something more akin to a set cover or networking problem – still a brave new world, but less and less unbounded the more I scoured the web. Ulimately, my task was gonna be something toward imagining all probabilities between order items and suppliers, and then reducing these matches against cost constraints. “All probabilities” was a strong clue.

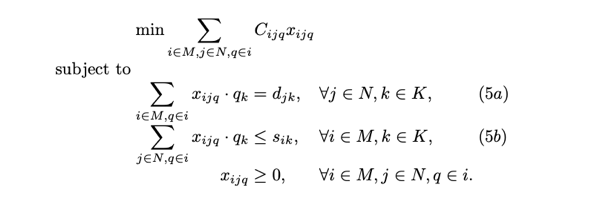

So, a bit surprisingly for someone not used to needing math everyday – and certainly not trying to fuck with this:

Eq. (5a) ensures every order is fulfilled, and (5b) limits service provided by a store. I soon found myself drifting at a comfortable altitude through Probability and Combinatorics with the help of other cow path pavers – we are community-taught developers, after all! – crafting a studied, yet heuristic, approach from where I could thread my inputs through techs like combinations and permutations to make some educated guesses.

The general step-rules of algorithm gradually accreted into something resembling following:

- Generate all possible combinations of order items

- Order items are unique, therefore we are working with a Set. We can therefore use a mathematical definition of a Powerset and create a function which outputs a set of all subsets of any set S:

powerset([A, B, C]) === [[A], [B], [A, B], [C], [A, C], [B, C], [A, B, C]]; - Generate all possible combinations of combinations order items that are less than or equal to the number of suppliers

- Effectively take the result of the Step 1 as the input Set for another powerset that only returns combinations of order item splits that can be fulfilled by available suppliers. For two suppliers:

powersetBySize(powerset([A, B, C]), 2) === [ [["A"]], [["B"]], [["A"], ["B"]], [["A", "B"]], [["A"], ["A", "B"]], [["B"], ["A", "B"]], [["C"]], [["A"], ["C"]], [["B"], ["C"]], [["A", "B"], ["C"]], [["A", "C"]], [["A"], ["A", "C"]], [["B"], ["A", "C"]], [ ["A", "B"], ["A", "C"], ], [["C"], ["A", "C"]], [["B", "C"]], [["A"], ["B", "C"]], [["B"], ["B", "C"]], [ ["A", "B"], ["B", "C"], ], [["C"], ["B", "C"]], [ ["A", "C"], ["B", "C"], ], [["A", "B", "C"]], [["A"], ["A", "B", "C"]], [["B"], ["A", "B", "C"]], [ ["A", "B"], ["A", "B", "C"], ], [["C"], ["A", "B", "C"]], [ ["A", "C"], ["A", "B", "C"], ], [ ["B", "C"], ["A", "B", "C"], ], ]; - Generate all permutations of suppliers

- Generate all viable routes by matching between the sized combinations of order items (result of Step 2) to supplier permutations (result of Step 3)

- Basically a fancy zipping computation

- Filter viable routes against both superficial and business constraints like like duplicated suppliers and supplier availability and/or inventory

- Compute the lowest cost route!

Now there’s a well-suited mathy modeling!

Some other thoughts

Combinatoric applications for this algorithm are quite expensive: you can see how the cardinality flourishes pretty fast above, and my example is for a modest 3 order items and 2 suppliers. If those numbers increase by any measure CPU will be tremendously exercised. (I believe the runtimes of these functions may be polynomial?) I can see why optimization becomes an attractively ripe apple for academicians. Quickly glossing, I can imagine optimizing the looping functions to break before completion when satsificing within a range of acceptance criteria; or structuring the data as Iterators and/or piping through transducers to minimize space complexity with lazy or eager techniques.

By the way, JS has pretty underwhelming options for combinatorics. I found one library that I found a bit awkward to use and ended up ditching in favor of a few standalone implementations of

powersetandpermutationsso I could ensure the code would comply with how I was trying to express the above heuristic. Unsurprisingly, Python’sitertoolshas combinatoric functions built in and even provides recipes for common tooling you can build on primitives likepermutations()andcombinations(). For example,powerset().def powerset(iterable): "powerset([1,2,3]) --> () (1,) (2,) (3,) (1,2) (1,3) (2,3) (1,2,3)" s = list(iterable) return chain.from_iterable(combinations(s, r) for r in range(len(s)+1))Or simply import the blessed

more-itertoolslibrary. Of course, this is expected for Python which is heavily developed for the data science world. -

habitations

The loss of logical purity primacy

Monday September 7, 2020

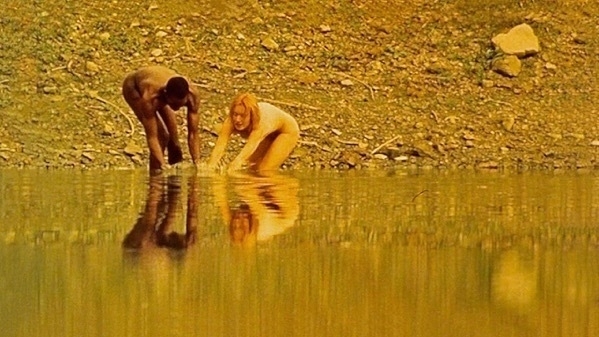

Femi Ogunbanjo & Hanne Klintoe, 1999, The Loss of Sexual Innocence Back in February, my entire notion of expertise and how experts make decisions became cracked after listening to episode 169 of the Greater than Code podcast. While the podcast is rolling I’m discovering my socialized construction of expert – like we pump and grow some kind of muscled query power of an expanding brain-database over time. And that combined with a trained analytical rigor gained through extensive tutelage, study, and practice.